In this post, I’m going to show how we can combine the image feature recognition algorithm I presented a few months ago with the categorization and prediction algorithms I presented in the two posts below, to produce an algorithm that can quickly categorize images without any supervision. The final algorithm I’ll present is O(n), where n is the number of pixels in the image we’re attempting to categorize.

This algorithm works so well that it can distinguish between paintings by different artists in just a few seconds. It can also distinguish between articles of clothing and other objects. This post will focus on its ability to distinguish between artists because, in my opinion, this shows that the algorithm is capable of unsupervised abstraction. It can say, without any guidance, what distinguishes a Van Gogh from a Caravaggio.

These algorithms use the same underlying categorization and prediction algorithms that I used in the posts below to imitate complex three-dimensional shapes, predict data, and approximate complex functions. In this case, I’ve added scripts that allow the underlying algorithms to analyze images. As a result, the underlying categorization and prediction algorithms are shaping up to be a set of generalized AI algorithms that appear to require only a “hook” into the subject matter in question.

Finally, note that all of the algorithms I’ll present in this post are, in the worst case, polynomial in time as a function of the number of pixels in the image. That is, even the algorithms we’ll use to construct the categories in the first instance are polynomial in time as a function of the number of pixels and images in the database. As a result, I believe these algorithms allow industrial-quality image recognition to be implemented on a consumer device. They can probably also allow industrial machines to analyze high-resolution video in real time.

Summary of the Underlying Image Feature Identification Algorithm

In the post below entitled “Image Recognition with no Prior Information”, I presented an algorithm that can quickly identify features within an image without any prior information about the image or the categories to which it might belong. At a high level, this algorithm takes an image and partitions it into objectively distinct features. Because it operates with no prior information at all, it isn’t looking for a particular object. Instead, it looks for every objectively distinct feature within the image. It can’t say what these features are, but it can nonetheless identify them as distinct from one another. As a result, this algorithm is only the first step of the image processing algorithms I’ll present in this post.

Like all of the other work that is part of this project, the feature recognition algorithm is rooted in information theory, and operates based upon the assumption that objectively distinct features within an image will contain different amounts of information. Specifically, the feature identification algorithm subdivides an image in a way that maximizes the difference in the amounts of information contained in each subdivision of the image. I call each subdivision of an image generated by the algorithm a region. Though it might seem pedantic to distinguish between a region and a feature, I’d like to reserve the term feature to denote an actual feature of an image, as identified by a human, whereas a region is a portion of an image identified by the algorithm that should contain a feature.
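To make the notion of an “amount of information” concrete, here is a minimal Python sketch. It measures the information content of a region as the Shannon entropy of its pixel colors. This is an illustrative assumption: the post doesn’t specify the exact measure identify_features uses. The helper region_entropy is hypothetical and not part of the Octave scripts.

```python
import math
from collections import Counter


def region_entropy(pixels):
    """Shannon entropy (in bits) of the color distribution of one region.

    `pixels` is a list of (r, g, b) tuples. This is an illustrative measure
    of information content, not necessarily the one identify_features uses.
    """
    if not pixels:
        return 0.0
    total = len(pixels)
    counts = Counter(pixels)
    # Sum of p * log2(1/p) over the distinct colors in the region.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


flat = [(200, 200, 200)] * 16                     # one color: no information
split = [(0, 0, 0)] * 8 + [(255, 255, 255)] * 8   # two equally likely colors
print(region_entropy(flat))   # 0.0
print(region_entropy(split))  # 1.0
```

Under this measure, a uniformly colored patch of sky scores near zero and a detailed patch scores higher. That is the kind of difference a subdivision would try to maximize.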

The first, second, and third attached images are examples of an image that has been processed by the algorithm. The first image is a photo I took at Lake Como, Italy. The second image shows the original image after processing, with the brightness of each region adjusted to reflect how likely the algorithm thinks the region is to be part of the foreground. The brighter a region, the more likely the algorithm thinks it is part of the foreground. You can get a sense of how the algorithm partitions an image by looking at the boundaries of the regions in the second image. The third image shows a single region identified by the algorithm: an individual standing on a diving board near the center of the original image.

You can do this yourself using the following code:

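pkg load communications

pkg load image

I = imread("[IMAGE FILE]");

[region_matrix N S_mat prob_mat] = identify_features(I);

% brighten the regions the algorithm considers likely to be foreground
imshow(ent_contrast_darken_file(I,N,region_matrix, prob_mat));

% display a single region (region 27 in this example)
figure, imshow(extract_region(I,N,region_matrix,27));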

Constructing a Tractable Color Palette

At a high level, the technique is straightforward. First, we partition an image using the feature identification algorithm described above. This produces a series of regions that should correspond to features within the image. Then, we calculate the average color of each region, producing a dataset of average colors. Finally, we categorize those colors using the vector categorization algorithm I presented in the two posts just below this one.
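The second step can be sketched in a few lines of Python. The sketch assumes a hypothetical representation in which the segmentation is a label map: a grid the same size as the image, holding an integer region label for each pixel. The actual region_matrix returned by identify_features may be structured differently. The name average_region_colors is illustrative; this is not the author’s calculate_average_feature_color.

```python
def average_region_colors(image, labels):
    """Return {region_label: (mean_r, mean_g, mean_b)}.

    `image` is a list of rows of (r, g, b) tuples; `labels` is a list of rows
    of the same shape holding an integer region label for each pixel
    (a hypothetical label-map format).
    """
    sums = {}
    counts = {}
    for image_row, label_row in zip(image, labels):
        for (r, g, b), label in zip(image_row, label_row):
            total = sums.setdefault(label, [0, 0, 0])
            total[0] += r
            total[1] += g
            total[2] += b
            counts[label] = counts.get(label, 0) + 1
    # Divide each channel sum by the region's pixel count.
    return {
        label: tuple(channel / counts[label] for channel in total)
        for label, total in sums.items()
    }


image = [[(255, 0, 0), (255, 0, 0), (0, 0, 255)],
         [(255, 0, 0), (0, 0, 252), (0, 0, 255)]]
labels = [[1, 1, 2],
          [1, 2, 2]]
print(average_region_colors(image, labels))
# {1: (255.0, 0.0, 0.0), 2: (0.0, 0.0, 254.0)}
```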

In this section, I’ll walk through applying this process to a single image. In the next section, we’ll apply it to a series of images. In the last section, I’ll present a vectorized implementation of the final comparison step, which will allow us to categorize new images using an algorithm that is O(n).

I’ve attached a photograph of one of Van Gogh’s iconic self-portraits that we’ll use as our first subject.

First, we’ll count the number of unique colors in the image using a vectorized script by Steve Eddins, published on the MathWorks website and available here:

https://blogs.mathworks.com/steve/2008/01/31/counting-occurrences-of-image-colors/

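I = imread("[IMAGE FILE]");

% one row per pixel, one column per RGB channel
rgb_columns = reshape(I, [], 3);

% n maps each pixel to its row in unique_colors
[unique_colors, m, n] = unique(rgb_columns, 'rows');

% number of pixels of each unique color
color_counts = accumarray(n, 1);

% number of unique colors
size(unique_colors, 1)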

The image contains 184,064 unique colors. Since it contains 768 × 608 = 466,944 pixels, the number of unique colors equals about 40 percent of the number of pixels. As a result, the image has a very rich color palette, and therefore quite a lot of color information for its size. Nonetheless, a human observer probably wouldn’t have much trouble quickly describing the palette of the painting. It contains a lot of yellow due to his large hat, a pinkish and orange background, and some pale blues in his blazer.

This type of description is a form of compression, since we’ve taken a large amount of color information and compressed it into a tractable statement that is nonetheless a meaningful description of its subject. Similarly, the goal of this section is to produce a compressed color palette that straddles the line between a set of colors that is digestible by a human and a set of colors that is useful to a machine. The color sets we’ll produce will be too large to be described in one sentence, but small enough to be expressed visually in a single color bar that a human can take in at a glance. The intended end result is a categorization algorithm that operates like an enhanced human. It quickly compresses information, but it nonetheless has access to details that a human observer would find overwhelming.

Continuing with the example of the self-portrait, first we’ll run the feature identification algorithm, which will produce a set of matrices we can use to partition the image into regions. Then, we’ll run a script that calculates and stores the average color of each region, which will produce a dataset of color vectors. Finally, we’ll run the categorization algorithm on that dataset of color vectors, which will produce a core set of color vectors that can be thought of as forming a basis for the colors in the image. I call these basis vectors the anchors of their color categories, since the categorization algorithm treats each anchor vector as the geometric center of a category, meaning that all other vectors in the category defined by the anchor are within some distance, delta, that the algorithm calculates based upon a variety of factors that I explain in the two posts below this one.
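As a rough illustration of what anchors are, the following Python sketch compresses a list of colors with greedy “leader” clustering. Each color joins the first anchor within a fixed distance delta, or else becomes a new anchor. This is a swapped-in, much simpler technique, not the author’s optimize_categories_3D. Here, delta is chosen by hand rather than calculated, and each anchor is the first color of its category rather than its geometric center. The sketch does preserve the defining property described above: every vector lies within delta of its category’s anchor.

```python
import math


def leader_cluster(colors, delta):
    """Greedy leader clustering of color vectors.

    Each color joins the first existing anchor within Euclidean distance
    `delta`; otherwise it becomes a new anchor. Returns (anchors, assignments),
    where assignments[i] is the index of the anchor of colors[i].
    """
    anchors = []
    assignments = []
    for color in colors:
        for index, anchor in enumerate(anchors):
            if math.dist(color, anchor) <= delta:
                assignments.append(index)
                break
        else:  # no anchor was close enough
            anchors.append(color)
            assignments.append(len(anchors) - 1)
    return anchors, assignments


# Hypothetical region colors: three yellows and two blues.
colors = [(250, 220, 40), (245, 215, 50), (60, 90, 160),
          (255, 225, 35), (70, 95, 150)]
anchors, assignments = leader_cluster(colors, delta=30)
print(anchors)      # [(250, 220, 40), (60, 90, 160)]
print(assignments)  # [0, 0, 1, 0, 1]
```

Five region colors collapse into two anchors. That is the same kind of compression, on a tiny scale, as reducing an image’s regions to a short color bar.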

All of this can be done using the following code:

[region_matrix N S_mat prob_mat] = identify_features(I);

figure, imshow(ent_contrast_darken_file(I,N,region_matrix,prob_mat));

data_array = calculate_average_feature_color(I,N,region_matrix, prob_mat, 0, 0);

[data_categories_array category_vec anchor_array H_final delta] = optimize_categories_3D(data_array,0);

color_bar_compressed = display_color_array(anchor_array, data_categories_array);

figure, imshow(color_bar_compressed)

The feature identification algorithm generates 94 regions. The color bar shows the compressed set of colors generated by the categorization algorithm, which consists of 44 anchor colors. Visually, this set of colors is clearly representative of the underlying painting, despite containing only about 0.02% of the unique colors in the image. Average color is generally a very lossy metric for an image. But because we’ve taken the average color of objectively distinct features of the image, we’ve ended up with meaningful color information while still achieving an enormous amount of compression. Not to boast, but this is actually quite remarkable. The algorithm has taken over one hundred thousand colors from an image and produced, without any supervision, a set of 44 colors that are perfectly representative of the underlying image. Moreover, it would almost certainly be impossible for a human to sift through all of the colors in the original image and select a comparable set of colors. At the same time, these are the colors that a human probably would select if they could. As a result, this process generates the same type of compression that a human-generated verbal summary achieves, but on a mechanized scale, and expressed nonverbally, in the form of an image. Interestingly, it also suggests that nonverbal summaries of this type could allow machines to convey complex information to their human counterparts.

This of course does not preclude more sophisticated measures from being used in lieu of average color. Instead, I’m presenting this as a simple example of a more generalized approach to image recognition that consists of three primary steps:

(1) identify the features of an image using the feature identification algorithm described above;

(2) analyze the properties of each resulting region, producing a metric;

(3) categorize the resulting metrics.
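The three steps can be written as a generic pipeline in which each step is a pluggable function. The Python sketch below only illustrates that structure. The functions segment, metric, and categorize are hypothetical stand-ins for identify_features, calculate_average_feature_color, and optimize_categories_3D. The toy versions used here operate on a flat list of gray levels rather than an image.

```python
def categorize_image_features(image, segment, metric, categorize):
    """Generic three-step pipeline.

    (1) segment the image into regions, (2) compute one metric per region,
    (3) categorize the resulting metrics. Each step is a caller-supplied
    function, so any of them can be swapped out.
    """
    regions = segment(image)                          # step (1)
    metrics = [metric(region) for region in regions]  # step (2)
    return categorize(metrics)                        # step (3)


# Toy stand-ins: the "image" is a flat list of gray levels.
def segment_into_fours(pixels):
    return [pixels[i:i + 4] for i in range(0, len(pixels), 4)]


def mean_level(region):
    return sum(region) / len(region)


def bucket_by_hundreds(values):
    return [round(value, -2) for value in values]


pixels = [10, 12, 11, 9, 200, 205, 198, 202, 14, 8, 10, 12]
print(categorize_image_features(pixels, segment_into_fours, mean_level,
                                bucket_by_hundreds))
# [0.0, 200.0, 0.0]
```

In the toy run, the two dark regions land in the same category and the bright region lands in another. This is the behavior step three should produce when the metric is visually meaningful.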

If our metric is visually meaningful, then the categories we’ll construct in step three will cause the metrics associated with similar looking features to be categorized together, thereby creating categories of similar looking features. It turns out that average color works quite well, though there are undoubtedly variations on this theme that might be more useful in other contexts.

Categorizing Images

By applying the process above to a category of similar images, we can construct a palette that is representative of an entire category of images. In this case, we’re going to construct two palettes: one generated by analyzing a series of paintings by Van Gogh, and another generated by analyzing a series of paintings by John Singer Sargent. These two painters worked with somewhat similar color schemes and, to a non-expert like myself, a superficially similar impressionistic style. Nonetheless, the paintings I’ve selected are distinguishable, and as a result, the two categories of paintings will produce different color palettes. We’ll analyze a set of 8 paintings by each artist, for a total of 16 images, each of which is attached as one of the last 16 images.

We can produce the palettes using the following code:

directory_path_A = "[Van Gogh]";

directory_path_D = "[Sargent]";

num_images_A = 8;

num_images_D = 8;

[category_tree1 delta_tree1 anchor_tree1 data_array1] = generate_single_path_img_data(directory_path_A, num_images_A);

[category_tree4 delta_tree4 anchor_tree4 data_array4] = generate_single_path_img_data(directory_path_D, num_images_D);

[data_categories_array1 category_vec1 anchor_array1 H_final1 delta1] = optimize_categories_3D(data_array1,0);

[data_categories_array4 category_vec4 anchor_array4 H_final4 delta4] = optimize_categories_3D(data_array4,0);

color_bar_compressed1 = display_color_array(anchor_array1, data_categories_array1);

color_bar_compressed4 = display_color_array(anchor_array4, data_categories_array4);

figure, imshow(color_bar_compressed1)

figure, imshow(color_bar_compressed4)

The Van Gogh set of images generates 711 regions, ultimately compressed into a set of 268 anchor colors. The Sargent set generates 600 regions, compressed into a set of 243 anchor colors. Yet again, these algorithms achieve an enormous amount of compression. They take the millions of colors in the underlying images and generate a comparatively minuscule set of colors that a human being can easily observe and intuitively understand, albeit nonverbally.

The Van Gogh palette consists of rich, bright pastels. The Sargent palette consists of what I thought was a surprisingly dark set of colors, mixed with brighter colors that are nonetheless closer to grey than the vibrant colors that make up the Van Gogh palette.

If we were to take yet another Van Gogh painting, and compare the color palette of that new painting to the Van Gogh palette we just produced, it would probably be a decent match. Moreover, the color palette of the new Van Gogh would almost certainly be a better match for the existing Van Gogh palette than the Sargent palette. As a result, if we’re given a new image, and we assume that the new image is either a Van Gogh or a Sargent, then by comparing the color palette of the new image to the two color palettes we just produced, we should be able to categorize that new image as either a Van Gogh or a Sargent.

The image categorization algorithm I’ll present in this section is O(n*log(n)), since we’ll need to run the feature identification algorithm on the new input image in order to construct its color palette. In the next section, I’ll present a vectorized version of this algorithm, which is O(n) and takes into account the color of every pixel in the image. As a result, the vectorized algorithm is not only faster but also more powerful, since it uses all of the color information within an image. Nonetheless, the intuition and inspiration for the vectorized algorithm come from this longer-form process.

Let’s begin by generating a color palette for the attached beardless Van Gogh self-portrait, using the same code from the previous section. This image was not part of the database that generated the Van Gogh palette, but just by looking at it, we can already see that it’s probably going to fit well. Not surprisingly, the resulting color palette looks a lot like a subset of the palette for the entire Van Gogh series. As a result, it makes sense to expect that this set of colors will fit the Van Gogh palette better than the Sargent palette.

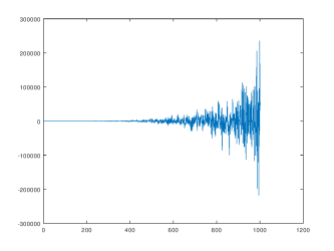

We can test this hypothesis using a prediction function I presented in the previous post. It takes a new data item and attempts to categorize it into an existing dataset that has already been analyzed using the categorization algorithm. At a high level, the prediction algorithm attempts to place the new data item into an expanded set of anchor vectors that are structured as a tree. If it can place the new data item somewhere in the tree, then the new data item is a good match for the dataset. If it fails, then the new data item is not a good match. For a full explanation of how and why the prediction algorithm works, see the two posts just below this one.
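A minimal Python sketch of placing an item into a tree of anchors follows. Both the node layout and the descent rule are assumptions for illustration. Each node holds an anchor, a radius delta, and child categories. An item is accepted at a node when it lies within that node’s delta and, if the node has children, is also accepted by one of them. The actual prediction algorithm is described in the earlier posts. The sketch returns 0 on success and infinity on failure, mirroring the 0/Inf scores that AB_test_image reports for each region.

```python
import math
from dataclasses import dataclass, field


@dataclass
class AnchorNode:
    """Hypothetical node of an anchor tree: an anchor color, the radius
    `delta` of its category, and finer-grained child categories."""
    anchor: tuple
    delta: float
    children: list = field(default_factory=list)


def place(item, nodes):
    """Return 0 if `item` can be placed in the tree rooted at `nodes`, else inf.

    An item is accepted by a node if it lies within the node's delta and,
    when the node has children, is also accepted by at least one child.
    """
    for node in nodes:
        if math.dist(item, node.anchor) <= node.delta:
            if not node.children or place(item, node.children) == 0:
                return 0
    return math.inf


root = AnchorNode((230, 200, 60), 80, [
    AnchorNode((250, 220, 40), 25),
    AnchorNode((200, 170, 90), 25),
])
print(place((245, 215, 50), [root]))  # 0: fits the first child category
print(place((230, 200, 60), [root]))  # inf: fits the root but neither child
print(place((60, 90, 160), [root]))   # inf: outside the root category
```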

In this particular case, we can think of the prediction algorithm as attempting to fit each new feature color into an existing dataset of anchor colors. The greater the number of feature colors that match a given dataset of anchor colors, the more confident we can be that the new image, as a whole, matches that dataset in terms of its color palette. Of course, knowing that two images have similar color palettes is not, as a general matter, enough information to conclude that they belong to the same category of images or objects. But in this case, we’re tacitly assuming that the painting is either a Van Gogh or a Sargent. We then determine which category it belongs to by comparing a compressed representation of its color palette to the Van Gogh and Sargent palettes we just compiled.

This can be done using the following code:

[region_matrix N S_mat prob_mat img_data_array score_vector1 delta_vector1] = AB_test_image(I, category_tree1, anchor_tree1, delta_tree1);

[region_matrix4 N4 S_mat4 prob_mat4 img_data_array4 score_vector4 delta_vector4] = AB_test_image(I, category_tree4, anchor_tree4, delta_tree4);

sum(score_vector1 != Inf)

sum(score_vector4 != Inf)

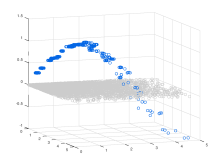

In short, the underlying prediction algorithm will attempt to place the average color of each region from the new image into each dataset. If it succeeds, it will return a score of 0, and if it fails, it will return a score of Inf, which indicates that the algorithm thinks that the new color doesn’t belong to the dataset. The last two lines of code count how many regions matched with the two datasets. In this case, the new Van Gogh matched with the Van Gogh dataset 50 times, and matched with the Sargent dataset 40 times. Each test involved attempting to place 80 regions, meaning that in both cases, a significant number of regions failed to categorize.
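The decision rule implied here is to choose the dataset that accepted the larger share of regions. It can be sketched in Python as follows. The score lists are reconstructed from the counts reported above: 50 and 40 placements out of 80 regions. The helpers match_rate and ab_decide are hypothetical, and breaking ties in favor of the first dataset is an arbitrary choice.

```python
import math


def match_rate(scores):
    """Fraction of regions with a finite score, i.e. that were placed."""
    return sum(1 for score in scores if score != math.inf) / len(scores)


def ab_decide(scores_a, scores_b, label_a="A", label_b="B"):
    """Choose the dataset that accepted the larger share of regions.

    Ties go to dataset A (an arbitrary choice).
    """
    rate_a, rate_b = match_rate(scores_a), match_rate(scores_b)
    winner = label_a if rate_a >= rate_b else label_b
    return winner, rate_a, rate_b


# Scores rebuilt from the reported counts: 50 of 80 regions placed in the
# Van Gogh tree and 40 of 80 placed in the Sargent tree.
van_gogh_scores = [0] * 50 + [math.inf] * 30
sargent_scores = [0] * 40 + [math.inf] * 40
print(ab_decide(van_gogh_scores, sargent_scores, "Van Gogh", "Sargent"))
# ('Van Gogh', 0.625, 0.5)
```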

Though this approach works reasonably well, note that it tests only whether the average color of an entire region is a good fit for the color palette in question. Since each region contains a large number of pixels, the test ignores the vast majority of the color information in an image. In the next section, we’ll vectorize this algorithm so that we can quickly test every pixel in an image. The result is a simple filter that tests whether the colors in an image fit within the complex, discontiguous sets of colors that make up the palettes we’ve constructed. Ultimately, this will allow us to categorize images quickly using a simple filtering process.

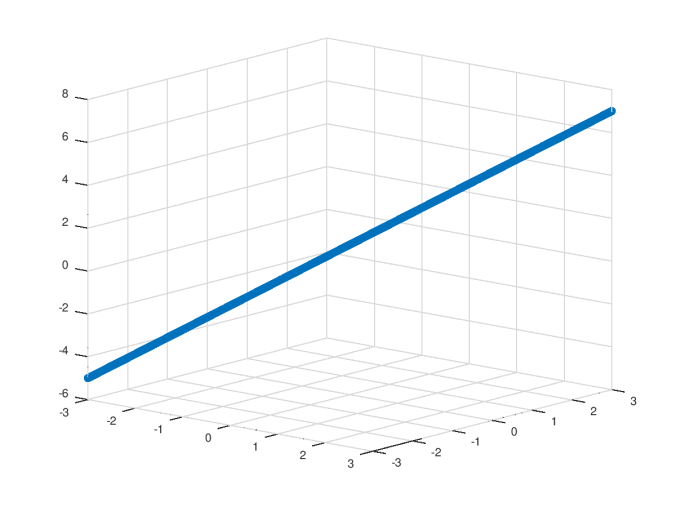

Vectorized Image Categorization

The first three steps in the process above cannot, to my knowledge, be vectorized. That is, if we want to construct a color palette for a database of images, we need to run the feature identification algorithm, calculate the average color of each resulting region, and then categorize the resulting dataset of colors. However, once the palette has been produced, comparing the colors within an image to it can take advantage of the built-in vectorized operations of Matlab and Octave, and possibly other languages as well.

In short, we test whether each pixel in an image is roughly within delta of at least one anchor color. If it is, the pixel passes the filter; if not, the pixel is removed. The percentage of pixels that survive filtration measures how much of the image matches the color palette in question. This in turn allows us to quickly determine which category a given input image belongs to: we choose the category whose filter has the highest pixel survival rate. That is, in terms of color, the input image fits best with the category of images whose filter does the least damage.
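Ignoring vectorization, the filter-and-compare logic can be sketched in Python as below. The sketch assumes a single Euclidean delta per palette, whereas generate_category_mask works from a tree of anchors and deltas. The palettes and pixels in the usage example are made-up toy values, not the Van Gogh or Sargent palettes.

```python
import math


def survival_rate(pixels, anchors, delta):
    """Fraction of pixels within Euclidean distance `delta` of at least one
    anchor color, i.e. the share of pixels that pass the palette filter."""
    if not pixels:
        return 0.0
    survivors = sum(
        1 for pixel in pixels
        if any(math.dist(pixel, anchor) <= delta for anchor in anchors)
    )
    return survivors / len(pixels)


def classify(pixels, palettes):
    """Return the palette whose filter keeps the most pixels, plus all rates.

    `palettes` maps a category name to an (anchors, delta) pair.
    """
    rates = {
        name: survival_rate(pixels, anchors, delta)
        for name, (anchors, delta) in palettes.items()
    }
    return max(rates, key=rates.get), rates


# Toy palettes and pixels (made-up values, not the painters' palettes).
palettes = {
    "warm": ([(250, 220, 40), (230, 150, 120)], 30),
    "dark": ([(40, 40, 50), (90, 90, 90)], 30),
}
pixels = [(245, 215, 50), (235, 155, 110), (55, 55, 55), (250, 225, 45)]
print(classify(pixels, palettes))
# ('warm', {'warm': 0.75, 'dark': 0.25})
```

Each pixel is compared against every anchor, so the cost is proportional to the number of pixels times the number of anchors. For a fixed palette, that is linear in the number of pixels.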

For example, if we’d like to determine whether a given image is a Van Gogh or a Sargent, we can use the color palettes we generated above and test the new image using a Van Gogh filter and a Sargent filter. The filter with the higher pixel survival rate is the better fit, and therefore tells us which of the two painters is more likely to have held the brush.

Returning to the beardless Van Gogh portrait we tested above, we can apply a Van Gogh filter and a Sargent filter to that image using the following code:

%the Van Gogh filter

[not_mask mask] = generate_category_mask(I, anchor_tree1, delta_tree1);

I_Post = I.*mask;

figure, imshow(I_Post)

num_on_pixels = sum(mask(:) == 1);

[row col x] = size(I);

num_pixels = row*col;

survival_rate1 = num_on_pixels/num_pixels

%the Sargent filter

[not_mask mask] = generate_category_mask(I, anchor_tree4, delta_tree4);

I_Post = I.*mask;

figure, imshow(I_Post)

num_on_pixels = sum(mask(:) == 1);

[row col x] = size(I);

num_pixels = row*col;

survival_rate4 = num_on_pixels/num_pixels

The survival rate for the Van Gogh filter is 0.29383, or roughly 30% of the pixels, whereas the survival rate for the Sargent filter is 0.13676, or roughly 14% of the pixels.

As a general matter, this approach works astonishingly well. It produces wide margins between survival rates even in cases like this one, where we’re distinguishing between similar images that are, frankly, difficult even for some humans to categorize. For example, suppose we’d like to A/B test which of two dresses an image contains. This approach will work as long as the two dresses have objectively distinct color palettes, even if they look superficially quite similar to a human observer. Since this approach is not necessarily restricted to color, I believe this algorithm could mark the beginning of an entirely new approach to image recognition.

Octave Scripts:

calculate_average_feature_color